Machine learning: one field giving voice to many

Willem de Vries

Similar to human language acquisition by infants, language learning by machines can be viewed as building a ‘language learning box’ that learns the words and sound units in the language it receives as input from its surroundings. | Illustration by Roulé le Roux, based on image supplied by Prof Herman Kamper

When Herman Kamper, a professor in the Department of Electrical and Electronic Engineering at Stellenbosch University (SU), speaks about his research on speech processing, its interdisciplinary nature is immediately evident.

The lens through which he regards his research is that of machine learning — a branch of artificial intelligence (AI) that enables systems to extract patterns and dependencies from data without being explicitly instructed on how to do so.

True to the nature of the machine learning field, Kamper’s research incorporates cognitive science, engineering, and computer science and fosters international cooperation between universities and industry on topics as seemingly disparate as online gaming design and child language development.

The humanising of machines' inner workings

Kamper’s research is primarily focused on addressing engineering-related problems in the field of machine learning, rather than on the end applications.

More specifically, he aims to understand the nuts and bolts of advanced modelling to see whether existing algorithms may offer more to the field than previously thought. At the same time, his work reveals how machine learning is being understood in increasingly humanising terms. In fact, this way of speaking has become so prevalent that the Oxford Dictionary of English uses no inverted commas when describing the use and development of computer systems that are able to ‘learn’ and ‘adapt’ without following explicit instructions, purely by using algorithms and statistical models to ‘analyse’ and ‘draw inferences’ from patterns in data.

In Kamper’s work on language, technology moves even closer to mimicking human cognition as he joins other researchers in creating and refining advanced models that ‘learn’ language without intervention, in a way seemingly similar to a child’s acquisition of their first words.

Prof Herman Kamper | Photo by Stefan Els

Pathway to AI research

Kamper completed his master’s degree at SU under Prof Thomas Niesler, whose research focus includes speech and language processing, especially for under-resourced languages. (At present, English-language data sets disproportionately populate the field of machine learning.)

Kamper was first introduced to the field of language processing when Niesler invited Louis Ten Bosch, an associate professor in the Centre for Language Studies at Radboud University in Nijmegen, to SU. He remembers Ten Bosch giving “a talk on how they [i.e., his research team] were trying to use machine learning to essentially figure out how young children acquire language and, specifically, simulate that process by building machine learning models. These models, when fed speech, would then start to learn words.”

At that stage of his studies, Kamper already had a strong interest in machine learning and signal processing. For his PhD, he chose a topic that would force him to explore these interesting fields. A very specific engineering problem soon caught his attention: How do you get a machine to learn in a way that resembles the process of human language acquisition?

The scope of his studies widened when he connected with one of his supervisors in Edinburgh, Prof Sharon Goldwater, “who came from a more psychological and cognitive modelling background and was interested in pivoting towards speech technology”.

Kamper went on to obtain his PhD at the University of Edinburgh in 2017. The topic of his dissertation was unsupervised neural and Bayesian models for zero-resource speech processing. After his doctoral studies, Kamper did postdoctoral research at TTI Chicago, working on unsupervised speech processing and machine learning that combines speech and vision.

Today, Kamper’s research focus is on using machine learning for speech processing so as to allow machines to acquire human language autonomously, with as little supervision as possible. He explains this process as follows: “One of the things we often do — and this is a very basic example — is give the model a piece of speech and tell it to compress this in some way and then try to reconstruct that same speech for us. This is what is called ‘reconstruction loss’. Or we tell the model: Here’s a piece of speech; predict what comes next. This works very well, and it’s basically how, for example, ChatGPT functions. Except we do this with a speech signal instead of text.

“We try to figure out what we need to put or build into the model, or what problem we need to get the model to solve. Then the model learns to solve that task, and we see what additional things the model can do.”
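The two training tasks Kamper mentions, compress-and-reconstruct and predict-what-comes-next, can be illustrated with a small Python sketch. Everything in it is an assumption made for illustration and not a description of his models: the "speech" is a synthetic mix of two sinusoids with noise, the compression is plain principal component analysis instead of a neural network, the predictor is a linear least-squares map, and the function names are hypothetical.

```python
import numpy as np

def make_frames(signal, frame_len):
    """Cut a 1-D signal into non-overlapping frames (one frame per row)."""
    n_frames = len(signal) // frame_len
    return signal[: n_frames * frame_len].reshape(n_frames, frame_len)

def reconstruction_loss(frames, n_components):
    """Compress each frame to n_components numbers with PCA, rebuild it,
    and return the mean squared error between input and rebuild."""
    mean = frames.mean(axis=0)
    centred = frames - mean
    # Rows of vt are the principal directions of the centred frames.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    basis = vt[:n_components]
    codes = centred @ basis.T        # compress
    rebuilt = codes @ basis + mean   # reconstruct
    return float(np.mean((frames - rebuilt) ** 2))

def prediction_loss(frames):
    """Fit a linear map from each frame to the next one and return the
    mean squared error of its predictions."""
    past, future = frames[:-1], frames[1:]
    weights, *_ = np.linalg.lstsq(past, future, rcond=None)
    return float(np.mean((past @ weights - future) ** 2))

# Synthetic stand-in for speech (an assumption, not real audio).
rng = np.random.default_rng(0)
t = np.arange(4000)
signal = (np.sin(0.05 * t) + 0.5 * np.sin(0.13 * t)
          + 0.01 * rng.standard_normal(t.size))
frames = make_frames(signal, frame_len=20)

loss_small = reconstruction_loss(frames, n_components=2)  # tight bottleneck
loss_large = reconstruction_loss(frames, n_components=8)  # looser bottleneck
loss_next = prediction_loss(frames)
```

In this toy setting, the tighter the bottleneck, the higher the reconstruction loss. In research of the kind described here, the model would typically be a neural network trained on recorded speech, and the interest lies in what the learned representation can be used for afterwards.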

This aspect of the work also came into play when Kamper was actively involved in Masakhane, a grassroots pan-African machine learning project that focuses on creating solid data sets for and in African languages, especially under-resourced ones.

“Masakhane was a very big thing in 2019 in Kenya at the Deep Learning Indaba, where it actually started,” says Kamper. “The Deep Learning Indaba is a training initiative that tries to do something somewhere across Africa every year, bringing hundreds of students together, and within a week, they upskill them in what AI is. At that point, Masakhane was very focused on machine translation of African languages. I built a small translator from English to Afrikaans. From then on, it just continued to grow.”

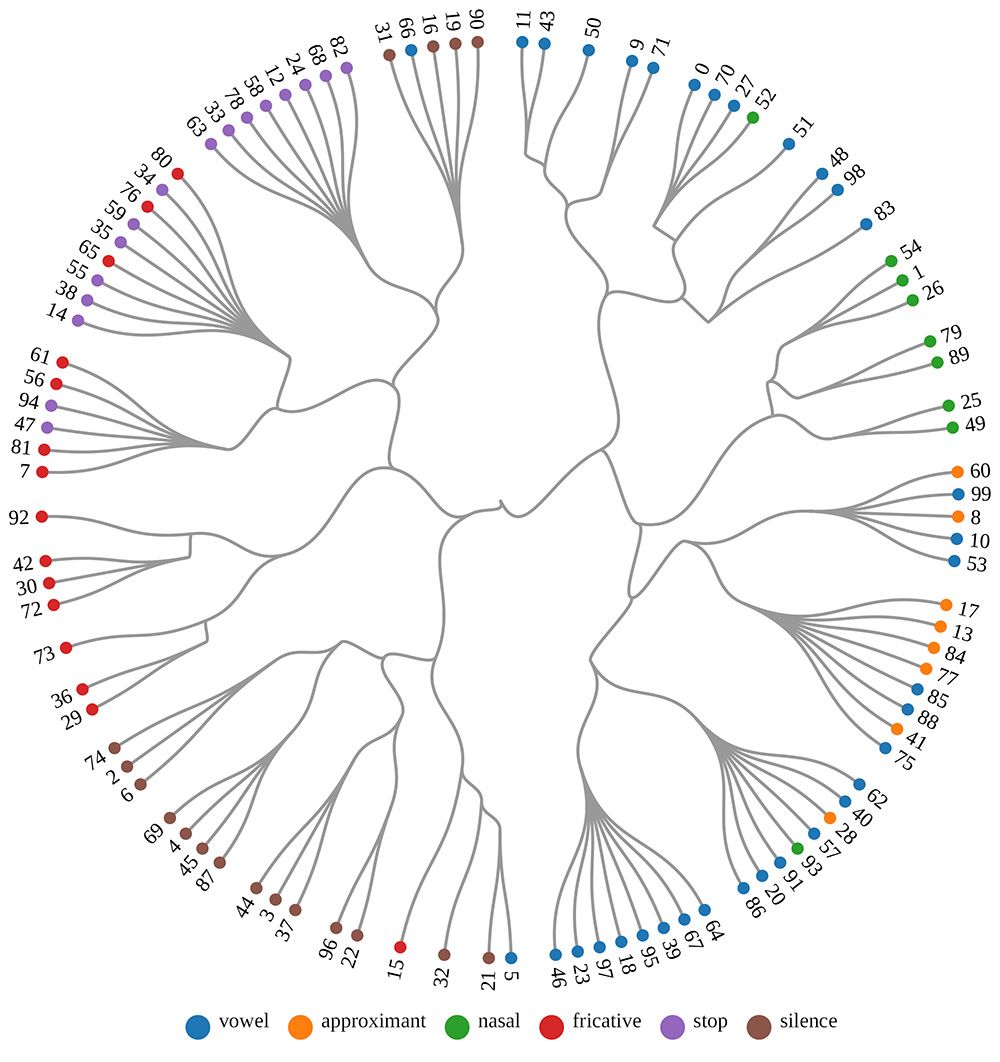

An illustration of how the units (numbered) that are discovered automatically by a neural network correspond to types of sounds in spoken languages.

Image courtesy of Prof Herman Kamper

Computation and cognition

What does cognition mean in the context of computer science, and how does it relate to Kamper’s work?

In his work in cognitive science, the late neuroscientist David Marr isolated three levels of cognition. First, there’s the computational level, at which the problem the system is trying to solve is defined and its goals are set. Next, the algorithmic level describes which algorithms are used and how. Finally, the implementational level deals with the hardware (the realisation of the system), whether it’s biological or a computer. “You really need to specify at which level you are conducting research,” Kamper says.

“Also, you must remember that the insights you gain at the different levels reinforce each other. A simple example of this is a calculator. An abacus can perform addition and subtraction, just like my silicon computer does, in the sense that it can achieve the same thing. The algorithm could even be the same. I could write a little program in Python [a programming language] that precisely follows the path of the abacus, but the hardware is completely different: there are electrons flowing through it, whereas with an abacus, I’m sliding objects around.
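A minimal sketch of the kind of Python program Kamper has in mind might look as follows. It is an illustration only and rests on simplifying assumptions: each rod simply holds a count of zero to nine beads (a real abacus groups its beads differently), and the function names are hypothetical. The algorithm, adding rod by rod and carrying to the next rod, is the same one a person follows when sliding beads; only the hardware differs.

```python
def to_rods(number, n_rods=6):
    """Represent a non-negative integer as bead counts per rod,
    units rod first (one decimal digit per rod)."""
    rods = []
    for _ in range(n_rods):
        rods.append(number % 10)
        number //= 10
    if number:
        raise ValueError("number does not fit on this abacus")
    return rods

def from_rods(rods):
    """Read the integer back off the rods."""
    return sum(beads * 10 ** place for place, beads in enumerate(rods))

def add_on_abacus(rods, number):
    """Add `number` rod by rod, carrying one bead to the next rod
    whenever a rod would hold ten or more beads."""
    result = list(rods)
    addend = to_rods(number, len(rods))
    carry = 0
    for place in range(len(result)):
        total = result[place] + addend[place] + carry
        result[place] = total % 10
        carry = total // 10
    if carry:
        raise OverflowError("result does not fit on this abacus")
    return result

abacus = to_rods(478)
abacus = add_on_abacus(abacus, 256)
total = from_rods(abacus)
```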

“Most machine learning researchers would still say that we don’t really have anything substantial to say about consciousness, though. It’s poorly defined, and we don’t really know what the definition is, but it is true that many people are thinking about it.

“It’s really a fascinating question. But at the same time, we still need to be concrete, both when doing scientific work and when building engineering applications.”

Language acquisition

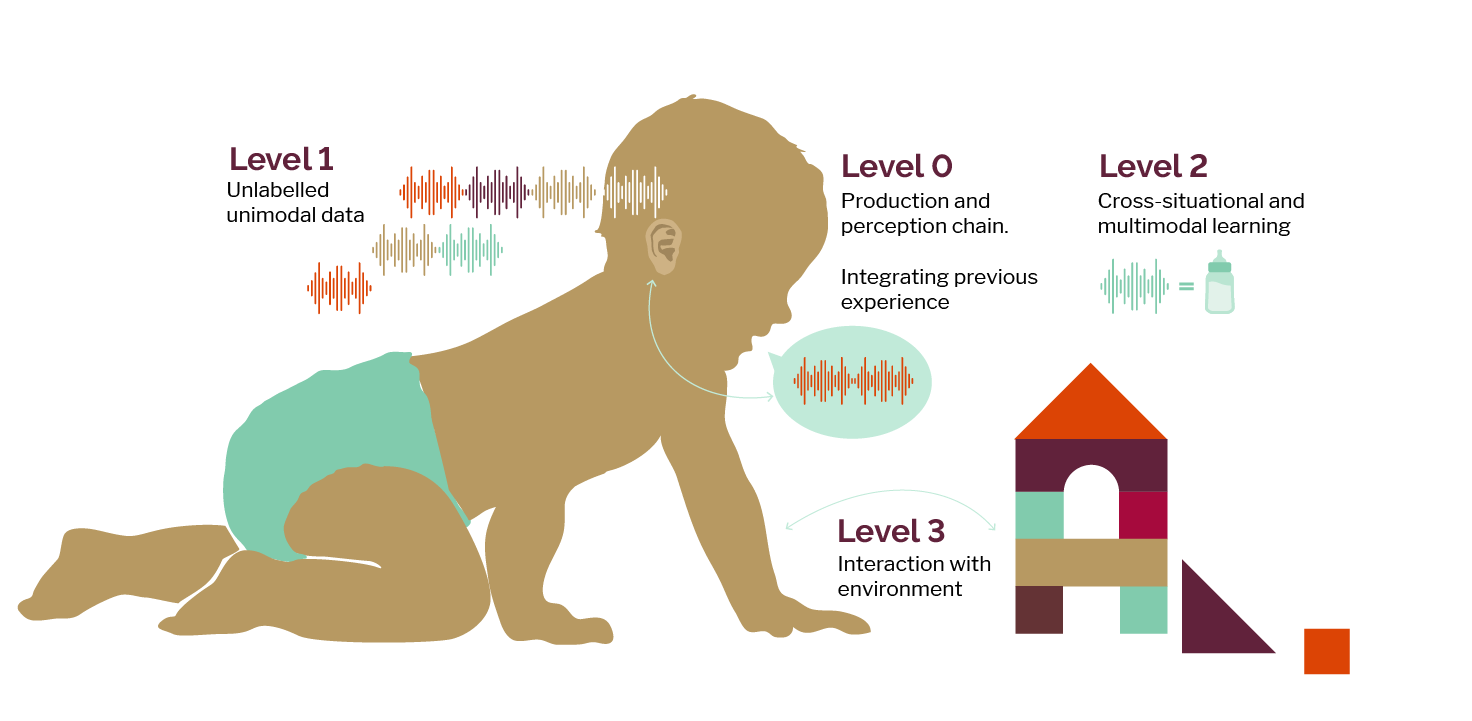

One goal of Kamper's research is to develop machine learning methods that mimic the way that human infants acquire language. From developmental studies, we know that this is based on information from different sources (the levels shown in the figure). This research includes work on all three levels, says Kamper.

Illustration based on image supplied by Prof Herman Kamper

An essential laboratory for ideas

With regard to AI and machine learning in an academic engineering context, Kamper acknowledges that it is simply impossible for the modern university to compete at scale with the advances in industry.

The unequal distribution of technological advances between academia and industry has required universities to recalibrate and adopt an agile approach to what’s happening in the field of machine learning and to how research is done, he explains.

For him, the strength of his academic work lies in its mostly experimental nature and focus on new or under-resourced avenues of research that the OpenAIs and Googles of the marketplace aren’t yet capitalising on.

Even though industry’s walled garden and intellectual wealth place it at a remove from the academic world, it is vital that research and engineering academics stay the course and embrace engineering as a laboratory for ideas, he says.

Kamper is especially interested in the key role that simple, foundational approaches play in researching algorithms as these become ever more complex and as knowledge and quality data sets are distributed through various channels. The faster and more complex the models become, he believes, the more necessary it is to see if simpler approaches can be applied to solve new tasks.

Image from Trackosaurus website

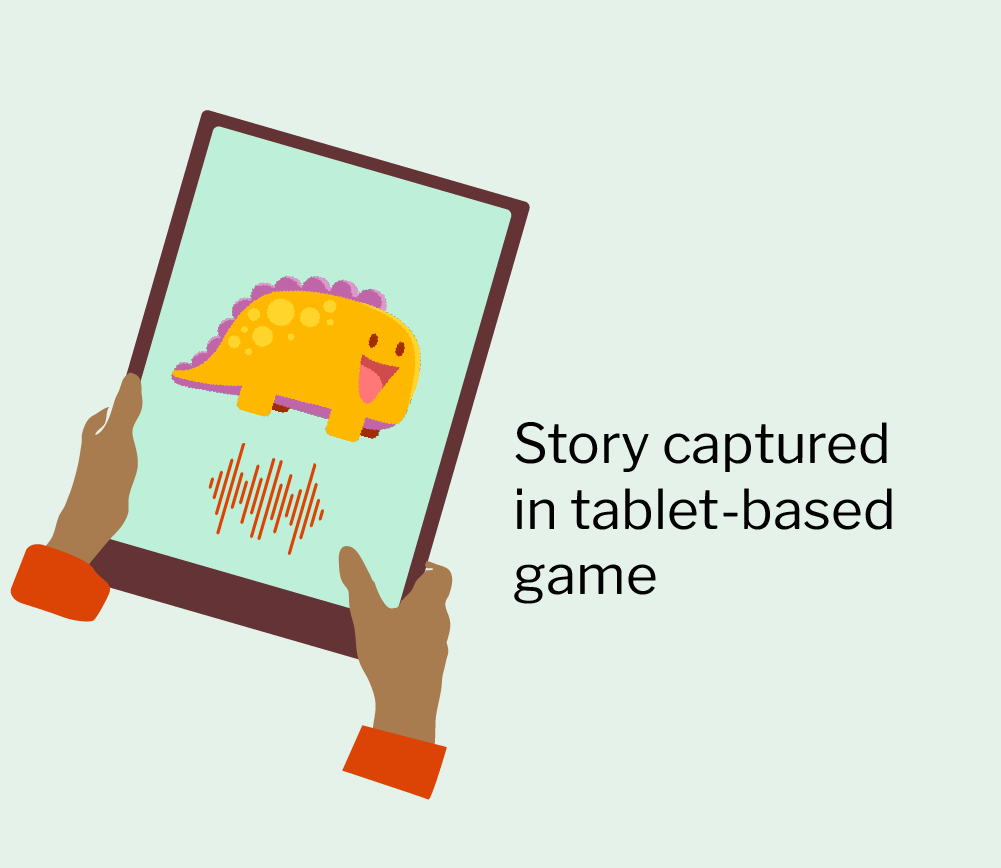

Tackling literacy in South Africa

Kamper is involved in a literacy project with a company called Trackosaurus, in which they analyse the way preschool children tell stories as an indication of their likelihood of having learning problems later in life. “We got a small sub-grant from the Gates Foundation to do this, and it’s super interesting. I’ve always wanted to do something in literacy.

“Young children’s storytelling abilities can be good predictors of whether or not they will have reading problems later on. The big problem is if you can’t tell a story and you don’t understand the flow of a story — the beginning, middle, and end — then it becomes difficult to read effectively later on.”

The project is aimed at preschool children in large classrooms in Mpumalanga’s SiSwati communities. Teachers there can have more than 40 children in front of them and not know who is struggling with what. “The idea [of the project] is that the children must tell a story to a tablet. Trackosaurus specifically has a maths game. The project allows the children to spend 20 minutes with the maths game once a week, and it then tells the teacher which children are struggling with specific things.

“What we’re now trying to do — and this is very far off — is to get the children to tell a story within the game as part of a storytelling exercise with guidance.

“We’re working with [education specialists] Prof Daleen Klop and Annelien Smith at SU. They’ve already done this experiment in an in-person setting. They sat with children and then said, ‘Tell a story’. Afterwards, they would analyse whether or not each of the children had gotten the structure of their story right and then, on the basis of the data, predicted their future ability to discern the flow of a narrative.

“Currently, we are creating prototypes [of the literacy testing software for the Trackosaurus application] built on the Afrikaans and Xhosa data sets that Annelien and Daleen have collected. Then we’ll redo it in SiSwati. Trackosaurus works very closely with the Department of Basic Education in Mpumalanga. We’re currently trying quite hard to involve other people from other universities as well.”

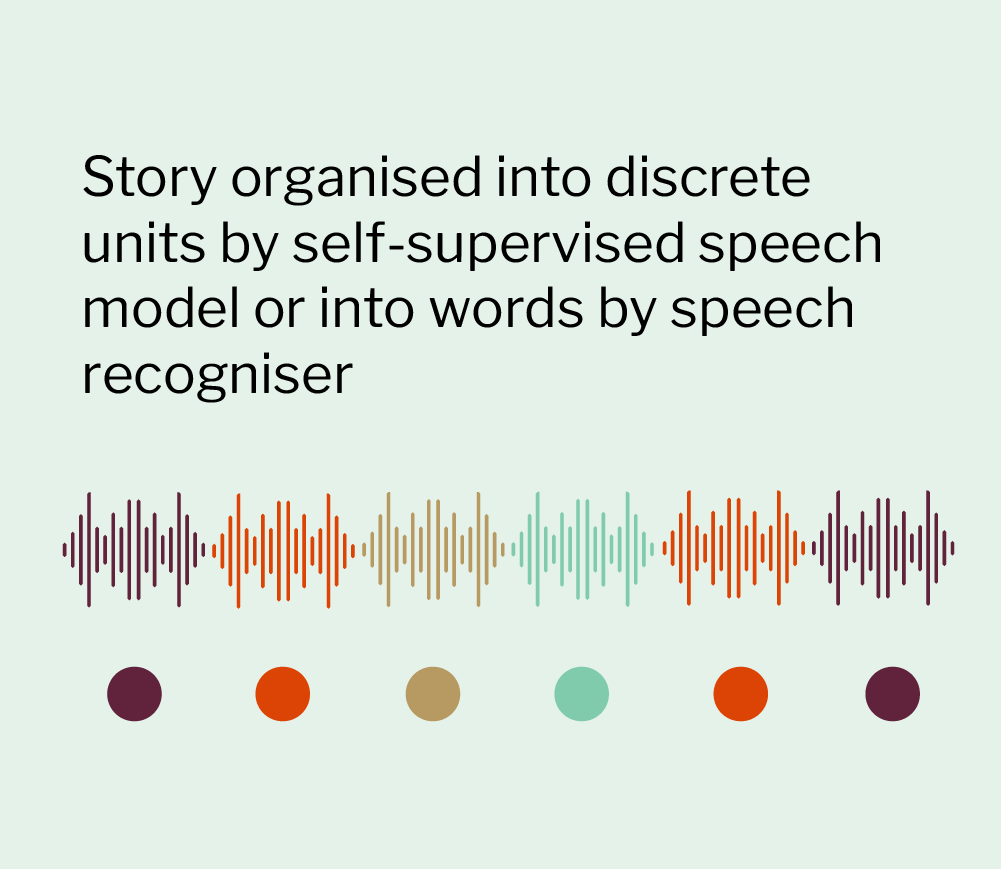

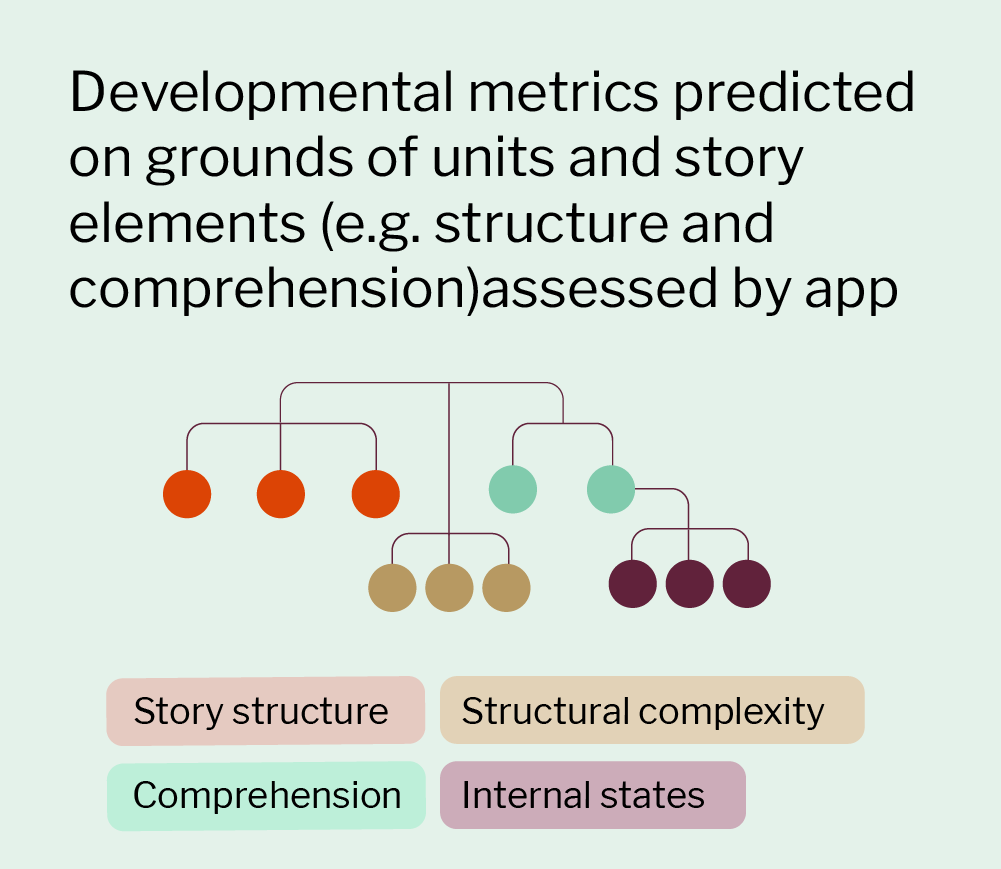

Storytelling metrics

One of the applications of the storytelling analysis is to use what the researchers learn and subsequently develop to assess children’s language development annually. This diagram illustrates one approach to analysing stories told by kindergarten children.

Turning theory into application

Although Kamper is excited to see how aspects of research into human language acquisition by machines (with minimal intervention) gain a foothold in other disciplines, his interest in this specific topic remains scientific as he focuses primarily on problem-solving in the field.

“My big research agenda essentially is that I want to build a ‘box’ that takes in spoken information from all over, and then starts making sense of it. That agenda is a very scientific one because if we get such a box and we know that the mechanisms inside it are similar to those that children use, then we can study that box as a model in ways that we can’t study children.

“It’s a very scientific endeavour, and to be successful, you must solve difficult engineering problems.”

Kamper and his student teams’ research has had some unexpected results. “For example, half of my students work on tasks like voice conversion or voice generation, which involves creating unique voices for characters in games. So, we work with Ubisoft, a gaming company (I’m not a big gamer, but my students are) and we’re addressing incredibly challenging speech technology problems. We have, for example, models that try to learn small bits — the phonetic units of a language — and then we realised, ‘Oh wow, we can actually use these phonetic units to convert one voice into another!’ And you don’t need a super complicated system to do it. So, we have written a paper, ‘Voice conversion with just nearest neighbours’, which describes a super simple technique. We wouldn’t have been able to do that if we didn’t have this kind of experimental research agenda.
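The idea behind the nearest-neighbour technique mentioned in the quote can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions and not the published system: the frame-level features are random arrays standing in for the output of a pretrained speech model, the step that turns features back into audio is left out entirely, and the function name `knn_convert` and the choice of `k` are hypothetical.

```python
import numpy as np

def knn_convert(source_feats, reference_feats, k=4):
    """Replace every source frame with the average of its k nearest
    reference frames, ranking neighbours by cosine similarity."""
    src = source_feats / np.linalg.norm(source_feats, axis=1, keepdims=True)
    ref = reference_feats / np.linalg.norm(reference_feats, axis=1, keepdims=True)
    similarity = src @ ref.T                          # (n_source, n_reference)
    nearest = np.argsort(-similarity, axis=1)[:, :k]  # k best matches per frame
    return reference_feats[nearest].mean(axis=1)      # (n_source, dim)

# Random stand-ins for speech features (an assumption for illustration).
rng = np.random.default_rng(0)
source = rng.standard_normal((50, 16))      # frames from the input speaker
reference = rng.standard_normal((300, 16))  # frames from the target speaker
converted = knn_convert(source, reference, k=4)
```

Every output frame is built only from frames of the target speaker, which is why the converted speech would take on that speaker's voice while following the content of the source utterance.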

“In South Africa, for a relatively small academic community, we used to have a very large research cluster for speech recognition and speech synthesis. In the past, people from the signal processing engineering field who were interested in those topics then also started taking an interest in machine learning and AI before gradually moving into these fields.

“Many people who come from a speech background have moved towards applied machine learning, likely because there is more industry interest. Work such as Prof Thomas Niesler’s on tuberculosis detection and Prof Jaco Versfeld’s on the detection of whale species on the basis of their song forms part of the field.” (Niesler investigated how to detect tuberculosis through the automated analysis of cough sounds. Versfeld and his team used passive acoustic monitoring and machine learning to recognise and distinguish between individual inshore Bryde’s whales.)

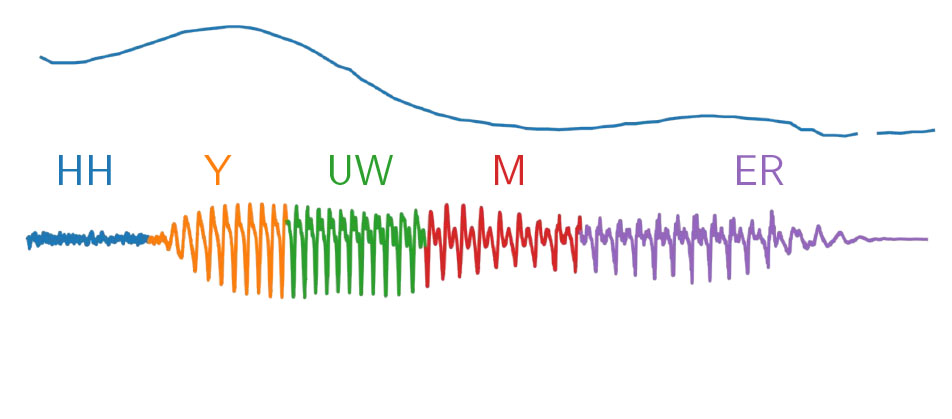

Speech signal

To build speech technology, researchers need methods that can disentangle speech signals at several different levels. Kamper is researching whether big neural network models developed by companies like Meta can be used for disentanglement.

Image courtesy of Prof Herman Kamper

The big shift

“If you look at developments [in generative AI] over the last three years, everything is coming out of enormous research labs like Google DeepMind and OpenAI. They are the ones driving these advancements. It’s not coming from small labs anymore. Those big labs, I must say, are also all running at a loss.

“The big shift happening now is that we [in academia] take what these big players are doing and try to see how we can use and adapt it and figure out what the strategies and techniques are, the modelling approaches and the mathematics behind it — everything needed to take what these big players are doing, bring it here, and then use it to solve [engineering] problems, whether they are things like voice conversion with very little data or figuring out how the methods could be used to set up a cognitive model of how a person learns.”

Kamper is of the opinion that GPT [which is short for ‘generative pre-trained transformer’, a kind of AI language model] “is not a complicated model compared to some of the other stuff, but I think we in academia shouldn’t try to do the same things that people in industry are doing. We don’t have the big computers needed to train these models. So, we can take what they’re doing, but then, crucially, we need to explore it widely.

“We must try weird, strange things, all over the place. And then the industry must hyper-optimise and fund it, and make that model super good.”

Teaching as a learning experience

Although juggling academic work and the raising of a young family can be daunting, Kamper’s resolve is firm when he speaks about his commitment to teaching.

“I teach a course in the structured master’s programme in machine learning and AI, which isn’t in my own faculty — it’s in applied mathematics in the Faculty of Science. What I try very hard with my students here at Stellenbosch is to open it [i.e., our research endeavours] up to people outside the University. The things we’re doing here for students can also benefit a wider community, so I share many of my lecture videos publicly. Funnily enough, the students still come to class. The keen students attend both. They get different inputs and insights from the live lectures and videos. This has been quite fascinating to me.”

Working with like-minded researchers locally and in Edinburgh, Canada, and Romania, Kamper has also written articles with Willie Brink in applied mathematics and Steve Kroon in computer science, among others. His collaborative approach also sees him invite students from other labs, “and they actually come and sit here and are part of the group even if I’m not explicitly supervising their study”.

As an undergraduate student, Kamper liked the idea of a machine that improves with experience, so there were two fields he considered for further study: machine learning and computational electromagnetics.

“So, I went on and started with speech recognition, and from there I was very fortunate to continue exploring. I never thought I would work on anything involving the generation of language. My students started digging into that, and suddenly I learned new things and made it look like I knew something about it. It was entirely accidental and very rewarding.

“I’m currently on my sabbatical, and one of my students is now my advisor. He tells me what to do, and then I implement it and I find this really rewarding.”

Though undergraduate students change course time and again, his postgraduate students “are all quite excited” about the possibilities and research in the field.

“I think it’s a very exciting time because I think we’re going to be able to solve problems that we couldn’t before. For me, what’s always interesting about such a transition period is that you must think much more about why you’re doing it and basically where your focus is. We must ask ourselves what the blind spots are. The process of figuring out where we need to look is fascinating. Students like it too.

“And it doesn’t have to be engineers. With GPT, someone who knows nothing about machine learning can solve a problem. I’m quite excited to see how people are going to use it.”

The research initiatives reported on above are geared towards addressing the United Nations’ Sustainable Development Goals number 4 and 9, and goals number 1, 2, and 18 of the African Union’s Agenda 2063.

Useful links

SU’s Department of Electrical and Electronic Engineering

Prof Herman Kamper’s list of publications

Prof Herman Kamper’s YouTube channel

X (formerly Twitter): @hermankamper, @MatiesResearch and @StellenboschUni